This class combines two base learners into a new one. The data matrix of the combined base learner is the row-wise tensor product of the data matrices of the two base learners being combined.
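The following base-R sketch shows what a row-wise tensor product computes. `row_tensor()` is a hypothetical helper, not part of compboost. It assumes the columns follow the `kronecker()` ordering, with the first matrix's columns varying slowest; compboost's internal ordering may differ. Row i of the result is the Kronecker product of row i of each input, so an n x p1 matrix and an n x p2 matrix yield an n x (p1 * p2) matrix.

```r
# Hypothetical helper (not part of compboost): row-wise tensor product.
# Row i of the result equals kronecker(X1[i, ], X2[i, ]), so the output
# has ncol(X1) * ncol(X2) columns.
row_tensor = function(X1, X2) {
  stopifnot(nrow(X1) == nrow(X2))
  p1 = ncol(X1)
  p2 = ncol(X2)
  # Repeat each column of X1 p2 times and tile the columns of X2 p1 times,
  # then multiply element-wise.
  X1[, rep(seq_len(p1), each = p2), drop = FALSE] *
    X2[, rep(seq_len(p2), times = p1), drop = FALSE]
}

X1 = matrix(1:6, nrow = 2)  # 2 x 3
X2 = matrix(1:4, nrow = 2)  # 2 x 2
Z = row_tensor(X1, X2)
dim(Z)
#> [1] 2 6
Z[1, ]
#> [1]  1  3  3  9  5 15
```

The column indexing avoids an explicit loop over rows. It also makes the column count of the result obvious: it is the product of the two marginal column counts.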

Format

S4 object.

Arguments

- blearner1 (`Baselearner*`): First base learner.
- blearner2 (`Baselearner*`): Second base learner.
- blearner_type (`character(1)`): Type of the base learner (if not specified, `blearner_type = "spline"` is used). The unique id of the base learner is formed by appending `blearner_type` to the feature names of both base learners: `paste0(blearner1$getDataSource()$getIdentifier(), "_", blearner2$getDataSource()$getIdentifier(), "_", blearner_type)`.
- anisotrop (`logical(1)`): Defines how the marginal penalties are combined. If `anisotrop = TRUE`, the marginal effects of the two base learners are penalized as defined in the underlying factories. If `anisotrop = FALSE`, an isotropic penalty is used, which means that both directions are penalized equally.
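A common way to penalize a tensor-product P-spline is to take the Kronecker sum of the marginal penalty matrices: `lambda1 * (K1 %x% I_p2) + lambda2 * (I_p1 %x% K2)`. Using separate `lambda1` and `lambda2` gives an anisotropic penalty, and a single shared value gives an isotropic one. The sketch below assumes this construction and the same `kronecker()` column ordering as a row-wise tensor product. `diff_penalty()` and `tensor_penalty()` are hypothetical helpers, and this page does not confirm that compboost assembles its penalty matrix exactly this way.

```r
# Hypothetical helper: difference penalty K = t(D) %*% D for p coefficients,
# where D is the difference matrix of the given order.
diff_penalty = function(p, differences = 2) {
  D = diff(diag(p), differences = differences)
  crossprod(D)
}

# Hypothetical helper: Kronecker-sum penalty for a row-wise tensor product whose
# columns are ordered like kronecker(row_of_X1, row_of_X2).
# Anisotropic: lambda1 != lambda2. Isotropic: lambda2 defaults to lambda1.
tensor_penalty = function(K1, K2, lambda1, lambda2 = lambda1) {
  lambda1 * (K1 %x% diag(nrow(K2))) + lambda2 * (diag(nrow(K1)) %x% K2)
}

K1 = diff_penalty(4)  # first margin: 4 basis functions
K2 = diff_penalty(3)  # second margin: 3 basis functions
P_aniso = tensor_penalty(K1, K2, lambda1 = 2, lambda2 = 5)
P_iso = tensor_penalty(K1, K2, lambda1 = 10)
dim(P_iso)
#> [1] 12 12
```

The term `K1 %x% I` penalizes differences along the first margin for each fixed index of the second margin, and `I %x% K2` does the reverse. Scaling both terms by the same `lambda` therefore penalizes both directions equally.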

Usage

BaselearnerTensor$new(blearner1, blearner2, blearner_type)

BaselearnerTensor$new(blearner1, blearner2, blearner_type, anisotrop)

Methods

- `$summarizeFactory()`: `() -> ()`
- `$transformData(newdata)`: `list(InMemoryData) -> matrix()`
- `$getMeta()`: `() -> list()`

Inherited methods from Baselearner

- `$getData()`: `() -> matrix()`
- `$getDF()`: `() -> integer()`
- `$getPenalty()`: `() -> numeric()`
- `$getPenaltyMat()`: `() -> matrix()`
- `$getFeatureName()`: `() -> character()`
- `$getModelName()`: `() -> character()`
- `$getBaselearnerId()`: `() -> character()`

Examples

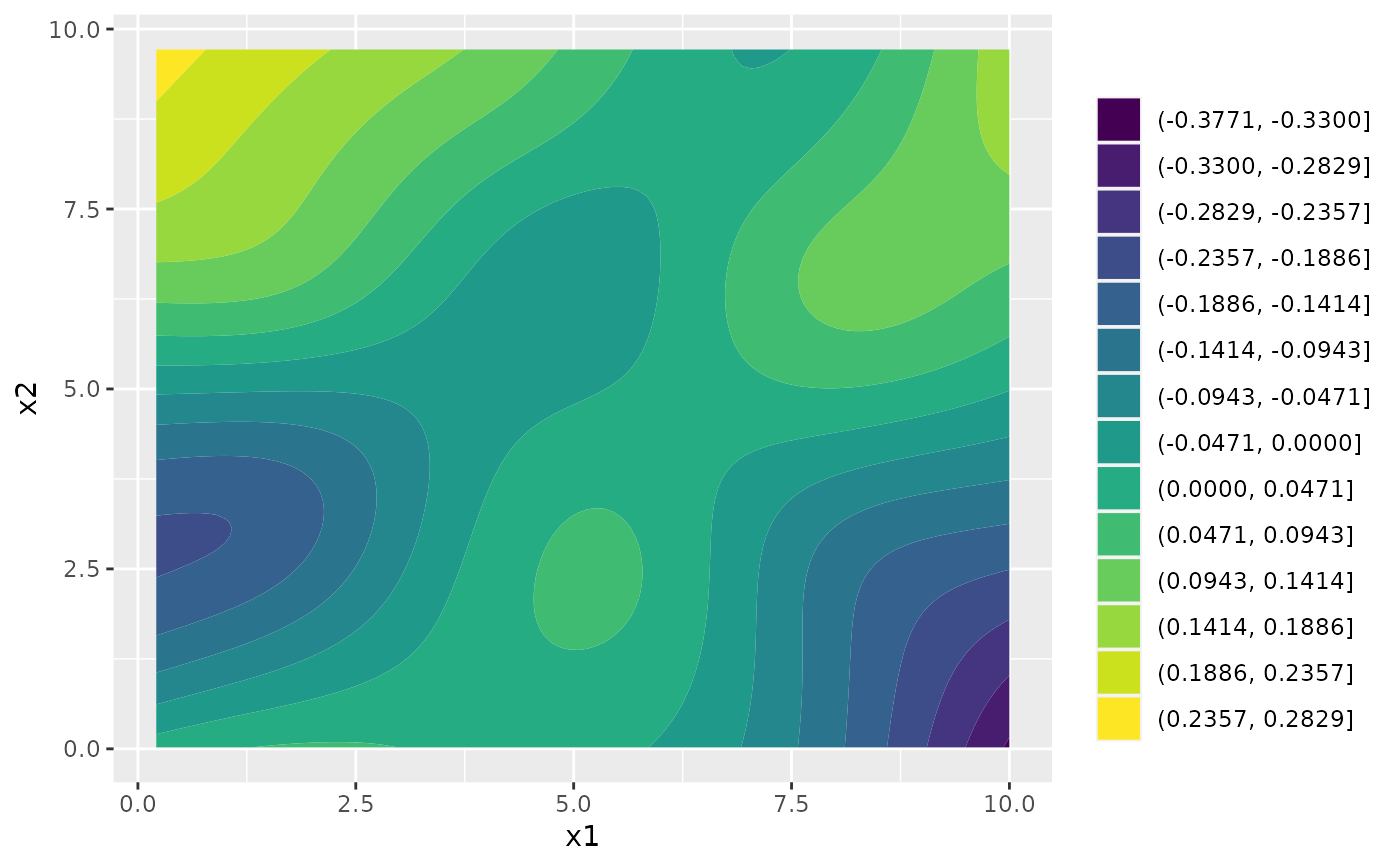

```r
library(compboost)

# Sample data:
x1 = runif(100, 0, 10)
x2 = runif(100, 0, 10)
y = sin(x1) * cos(x2) + rnorm(100, 0, 0.2)
dat = data.frame(x1, x2, y)

# S4 wrapper

# Create new data objects, a matrix is required as input:
ds1 = InMemoryData$new(cbind(x1), "x1")
ds2 = InMemoryData$new(cbind(x2), "x2")

# Create new P-spline base learner factories:
bl1 = BaselearnerPSpline$new(ds1, "sp", list(n_knots = 10, df = 5))
bl2 = BaselearnerPSpline$new(ds2, "sp", list(n_knots = 10, df = 5))

# Combine both factories into a tensor product base learner:
tensor = BaselearnerTensor$new(bl1, bl2, "row_tensor")

# Get the transformed data:
dim(tensor$getData())
#> [1] 196 100

# Get the meta data (degrees of freedom, penalty terms, and penalty matrix):
str(tensor$getMeta())
#> List of 3
#> $ df : num [1:2, 1] 5 5
#> $ penalty : num [1:2, 1] 15.3 15.6
#> $ penalty_mat: num [1:196, 1:196] 30.9 -31.1 15.6 0 0 ...

# Transform "new data":
newdata = list(InMemoryData$new(cbind(runif(5)), "x1"),
  InMemoryData$new(cbind(runif(5)), "x2"))
str(tensor$transformData(newdata))
#> List of 1
#> $ design:Formal class 'dgCMatrix' [package "Matrix"] with 6 slots
#> .. ..@ i : int [1:80] 0 2 4 0 1 2 3 4 0 1 ...
#> .. ..@ p : int [1:197] 0 3 8 13 18 20 20 20 20 20 ...
#> .. ..@ Dim : int [1:2] 5 196
#> .. ..@ Dimnames:List of 2
#> .. .. ..$ : NULL
#> .. .. ..$ : NULL
#> .. ..@ x : num [1:80] 3.33e-03 1.67e-07 5.85e-04 5.73e-02 2.74e-03 ...
#> .. ..@ factors : list()

# R6 wrapper
cboost = Compboost$new(dat, "y")
cboost$addTensor("x1", "x2", df = 5)
cboost$train(50, 0)
#> Train 50 iterations in 0 Seconds.
#> Final risk based on the train set: 0.12
#>
table(cboost$getSelectedBaselearner())
#>
#> x1_x2_tensor
#> 50
plotTensor(cboost, "x1_x2_tensor")
```
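The meta data in the example reports a `df` and a `penalty` value for each margin. One common way to convert degrees of freedom into a penalty is to find the `lambda` at which the effective degrees of freedom, `trace(X (X'X + lambda K)^-1 X')`, equal `df`. The sketch below assumes that definition and solves for `lambda` with `uniroot()`. `df_to_lambda()` is a hypothetical helper, and compboost's own routine may differ. The B-spline basis from `splines::bs()` only stands in for compboost's P-spline basis.

```r
library(splines)

# Hypothetical helper: find the penalty lambda for which the effective
# degrees of freedom trace(X (X'X + lambda K)^-1 X') equal `df`.
df_to_lambda = function(X, K, df, upper = 1e8) {
  XtX = crossprod(X)
  # trace(X (X'X + lambda K)^-1 X') equals trace((X'X + lambda K)^-1 X'X)
  edf = function(lambda) sum(diag(solve(XtX + lambda * K, XtX)))
  # edf decreases in lambda, so a single sign change lies in [0, upper]
  uniroot(function(lambda) edf(lambda) - df, lower = 0, upper = upper,
    tol = 1e-10)$root
}

set.seed(1)
x = runif(100, 0, 10)
X = bs(x, df = 10, degree = 3)                        # 100 x 10 B-spline basis
K = crossprod(diff(diag(ncol(X)), differences = 2))  # second-order difference penalty
lambda = df_to_lambda(X, K, df = 5)
```

A root exists here because `df = 5` lies strictly between 2, the null-space dimension of the second-order difference penalty, and 10, the number of basis functions.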